There is growing evidence that clinicians’ workload adversely affects clinical performance and contributes to adverse events and lower quality of care. Unfortunately, current methods of measuring clinical workload are crude (e.g., nurse-patient staffing ratios), retrospective (based on the volume of work units performed), and do not apply to the unique model of the one-patient-at-a-time care performed in the operating room (OR). To address this problem, colleagues at Vanderbilt University and the Tennessee Valley VAMC have piloted an instrument, the Quality and Workload Assessment Tool (QWAT), to measure the perceived clinical workload of individual nurses, surgeons, and anesthesia providers, as well as that of the surgical team as a whole. The QWAT also elicits data about intraoperative “non-routine” events (or NRE). NRE represent deviations from optimal care and thus may be a measure of quality of care. A conceptual model has been developed suggesting that performance-shaping factors (e.g., clinician fatigue or stress) contribute to clinical workload that, in turn, affects patient outcomes. In this multi-center project, we are testing this conceptualization of surgical clinical workload and its mediating effects on intra-operative quality of care and patient safety.

This project will contribute significantly to our understanding of the factors affecting the conduct and quality of surgical care and be an initial step toward identifying early warning signs of suboptimal and unsafe processes. These results will provide a more rational basis for improving working conditions, clinician training and staffing, care processes, and technology design.

New technologies are expanding the amount of information available to health care practitioners. In the perioperative environment, this includes an increase in patient-monitoring data. The dynamic nature of the perioperative environment makes it especially susceptible to problems of information overload. There is a need for a holistic and human centered approach in the analysis and redesign of perioperative information displays. The main hypothesis for this research is that the application of human factors design principles and the use of a human centered design process will lead to the design of perioperative information displays that improve patient care compared to current systems.

This research project involves four main components:

- Identification of human factors design principles based on contemporary theories of human decision making, situation awareness, and teamwork that are relevant to the dynamic, mobile, risky, team-based, and information-rich perioperative environment.

- The application of cognitive task analyses and knowledge elicitation methods to identify the important information requirements for the perioperative environment.

- The design of perioperative information management systems using a human-centered approach that includes a process of iterative user evaluation and redesign.

- Comparison of the new designs with conventional perioperative information displays under anesthesia crisis management scenarios using a human patient simulator.

In addition to the potential to improve patient safety through better information management in the perioperative environment, the results of this effort will have implications for:

- Training in the perioperative environment.

- System design in other dynamic, safety-critical health care environments.

Funding: NIH Agency for Healthcare Research and Quality.

One major limitation in the use of human patient simulators in anesthesiology training and assessment is a lack of objective, validated measures of human performance. Objective measures are necessary if simulators are to be used to evaluate the skills and training of anesthesia providers and teams or to evaluate the impact of new processes or equipment design on overall system performance. There are two main goals of this project. The first goal is to quantitatively compare objective measures of anesthesia provider performance with regard to their sensitivity to both provider experience and simulated anesthesia case difficulty. We are comparing previously validated measures of anesthesia provider performance to two objective measures that are fairly novel to the environment of anesthesia care: an objective measure of provider situation awareness and a measure of provider eye scan patterns. The second goal of this project is to qualitatively evaluate the situation awareness and eye tracking data to identify key determinants of expertise in anesthesia providers. These determinants of expertise may then be used to further enhance objective measures of performance as assessment tools and to inform training of anesthesia providers.

Funding: Anesthesia Patient Safety Foundation. This project is the recipient of the Ellison C. Pierce, Jr. Education Research Award.

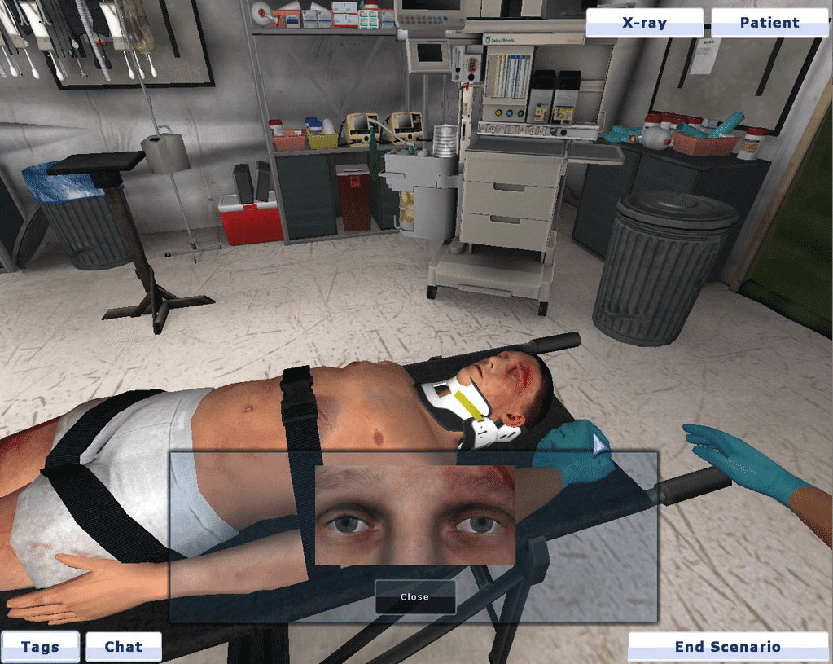

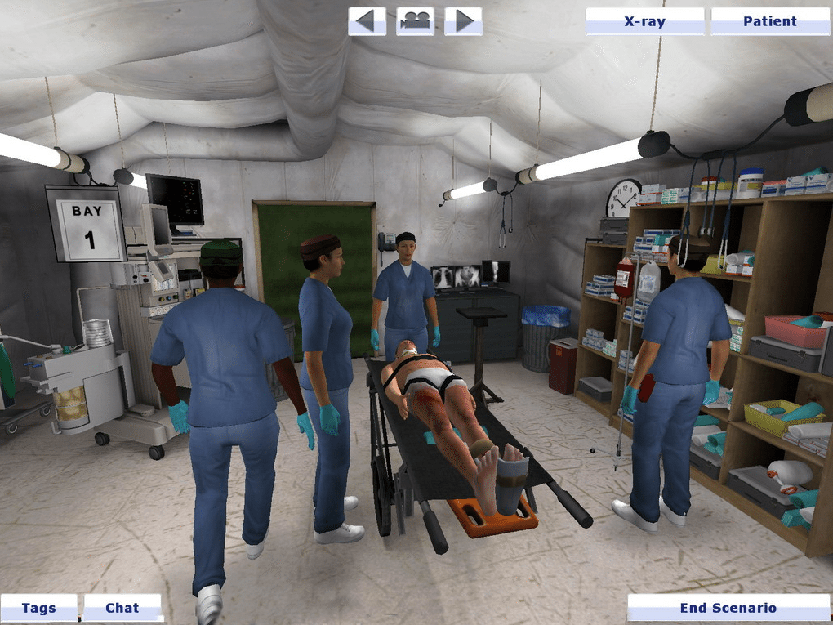

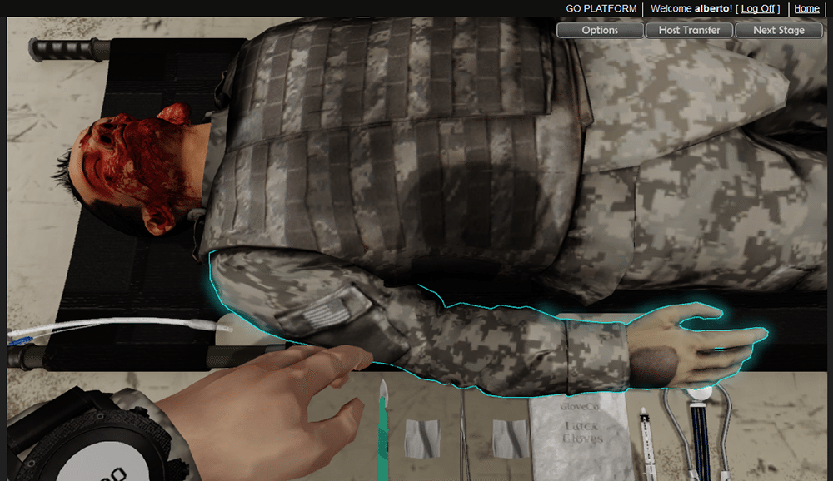

Effective team coordination is critical for the safe delivery of care. Development of these skills requires training and practice in an interactive team-based environment. A three-dimensional serious game environment provides an engaging and cost effective alternative to other interactive training solutions such as human patient simulation. In this project we are developing a three-dimensional interactive networked system for training of military health care team coordination skills. Although this is a development project with no formal experimental hypothesis, we will conduct qualitative evaluations of the design specification and alpha and beta versions of a demonstration prototype. Ease of use, practicality, scope, and effectiveness of the training system will be assessed through heuristic analysis by experts in team training.

Our specific aims include:

- Development of an immersive environment software platform for training of health care skills.

- Content development for the training of team coordination skills.

- Prototype of the 3D immersive environment using a military trauma scenario for the purposes of proof-of-concept.

- Planning for an assessment of ease of use and efficacy through an experimental trial at Duke University Medical Center.

The development of 3DiTeams will provide an effective solution to the problem of expanding the scope of team coordination skill training in military health care environments. In addition, the software platform we develop will allow for the integration of multiple scenarios and work environments (e.g., training modules) to allow expansion into public health care environments.

In collaboration with Applied Research Associates (ARA).

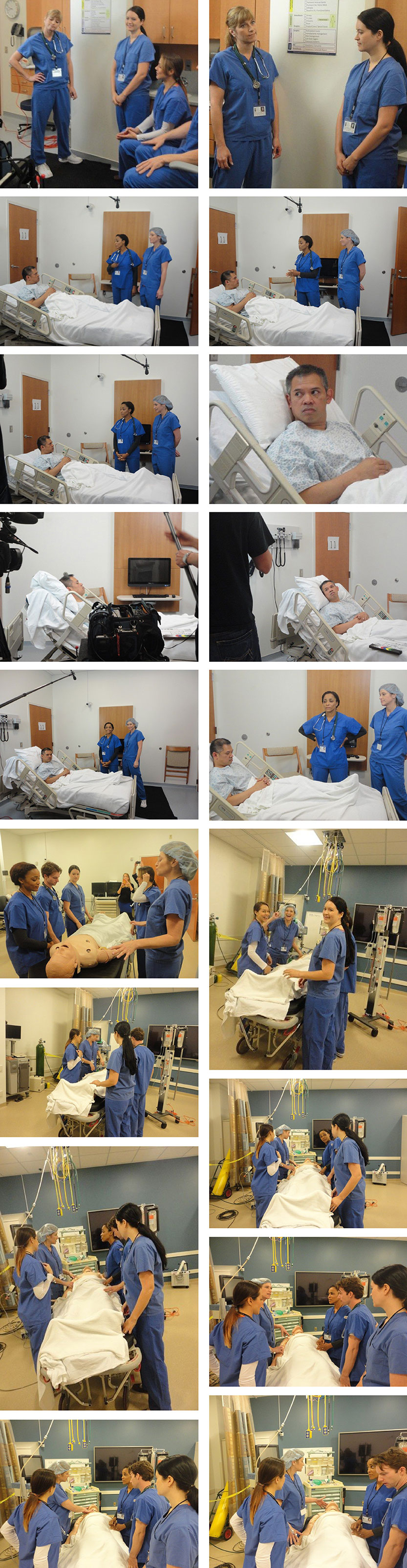

Research suggests that training of team coordination skills will be most effective when it incorporates opportunities for interactive practice of those skills in realistic work environments. There is little experimental evidence assessing and comparing the impact of different interactive training approaches with respect to improving the team coordination skills of participants. A multidisciplinary team of researchers at Duke University Medical Center, in collaboration with University of North Carolina Health Care, is developing several simulation approaches toward interactive training of health care team coordination. In addition, Duke University Medical Center and Virtual Heroes, Inc. are developing a 3D-interactive networked virtual reality team training tool (3DiTeams).

The primary objective of this project is to assess methods of interactive team training in order to design and implement a health care team training program that:

- Is cost effective.

- Can be feasibly implemented in clinical work and professional health care education environments.

- Has substantial impact on the team coordination skills of trainees.

Specific aims include:

- Pilot testing of 3DiTeams as an alternative to traditional interactive team training.

- Experimental comparison of participants’ improvement in team knowledge and behaviors following training using 3DiTeams and an alternative interactive team training approach (e.g., high fidelity patient simulation).

- Evaluation of the resulting experimental data along with realistic cost estimates to design and pilot test an efficient and effective team training program within Duke University Health System.

This research has the potential to significantly advance the delivery and distribution of effective team coordination training. Resulting experimental evidence should assist health care organizations in choosing or developing methods of training health care team skills. This research will also provide information needed to support a long term goal of developing health care team training that will be exportable beyond Duke. More importantly, the improvements in health care team training that result from this research are expected to have a broader impact on public health through the reduction of health care adverse events and enhancement of patient safety.

Funding: NIH Agency for Healthcare Research and Quality.

Clinical trials play an important role in the advancement of medical care. Over $6 billion is spent annually on clinical research. Clinical protocols are increasingly complex. Data inaccuracy in the early phase of a new research trial is a commonly known, but incompletely described, component of clinical research. These errors are likely a result of coordinators lacking mastery of the knowledge, skills, and attitudes needed to properly conduct the research protocol. Most historical studies on research integrity consider ethical issues associated with clinical trials and protocol design. Little attention has been paid to issues of data integrity and patient safety in the proper conduct of a trial. Modern theory stresses the importance of interactivity in learning. Simulation is considered a top methodology for learning complex behaviors. The use of high-fidelity patient simulation in clinical research training is expected to improve the coordinator’s acquisition of the knowledge, skills, and behaviors needed to properly perform a protocol. Enhanced coordinator performance is expected to lead to improved data integrity and heightened patient safety. Recent efforts in our laboratory demonstrate marked improvement in coordinators’ confidence in their ability to properly conduct the trial following high-fidelity simulation training. Objective measures of coordinator competence are now needed to properly assess the effects of simulation training on research integrity. One objective method of assessing coordinator competence is through queries of errors in the protocol’s data record. The goal of this study was first to define a taxonomy to categorize and quantify error rates in a reproducible fashion. The taxonomy was then used to define error rates and the learning curve in a recently completed multi-center trial. This project provides the groundwork for future studies investigating the impact of high-fidelity simulation on the competent performance of a clinical trial.
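As a rough illustration of how error rates and a learning curve might be read from a data record, the sketch below groups subjects by enrollment order and computes errors per data field in each group. The record layout, the category names, and the function `error_rates_by_block` are hypothetical and are not the taxonomy or analysis used in this project.

```python
from collections import defaultdict


def error_rates_by_block(subjects, block_size):
    """Return errors per data field for consecutive blocks of enrolled subjects.

    Each subject is a dict with (hypothetical) keys:
      "order"  - 1-based enrollment sequence number
      "fields" - number of data fields recorded for the subject
      "errors" - dict mapping an error category to a count
    """
    totals = defaultdict(lambda: {"fields": 0, "errors": 0})
    for subject in subjects:
        block = (subject["order"] - 1) // block_size
        totals[block]["fields"] += subject["fields"]
        totals[block]["errors"] += sum(subject["errors"].values())
    # Blocks are returned in enrollment order so the list reads as a curve.
    return [totals[b]["errors"] / totals[b]["fields"] for b in sorted(totals)]


# Made-up example data, not results from any trial.
subjects = [
    {"order": 1, "fields": 100, "errors": {"omission": 6, "transcription": 4}},
    {"order": 2, "fields": 100, "errors": {"omission": 5, "transcription": 3}},
    {"order": 3, "fields": 100, "errors": {"omission": 2, "transcription": 2}},
    {"order": 4, "fields": 100, "errors": {"omission": 1, "transcription": 1}},
]
rates = error_rates_by_block(subjects, block_size=2)
print(rates)
```

A falling sequence of rates across blocks would be consistent with a learning curve, although a real analysis would also need to account for site and protocol differences.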

Funding: NIH Office of Research Integrity / National Institute of Neurological Disorders and Stroke.

As part of her doctoral dissertation, Dr. Noa Segall (under the direction of Dr. David Kaber, North Carolina State University, and guidance of Dr. Wright and Dr. Taekman in the Duke Human Simulation and Patient Safety Center) developed a computerized system for detection, diagnosis, and treatment of perioperative myocardial ischemia and infarction (MI).

The development approach involved:

- Performing a hierarchical task analysis to identify anesthetist procedures in detecting, diagnosing and treating MI.

- Carrying out a goal-directed task analysis to elicit goals, decisions, and information requirements of anesthetists during this crisis management procedure.

- Coding the information collected in the task analyses using a computational cognitive model.

- Prototyping an interface to present output from the cognitive model using ecological interface design principles.

Validation of the decision support tool involved subjective evaluations of the tool and its interface design through an applicability assessment and a usability inspection. For the applicability assessment, three expert anesthesiologists were recruited to observe the tool perform during two hypothetical scenarios, hypotension and MI. They provided feedback on the clinical accuracy of the information presented, and all three experts indicated that they would use the tool in the operating room if it were further refined. Heuristic evaluation was employed to inspect the usability of the interface. Two usability experts and the three anesthesiologists were asked to identify human-computer interaction design heuristics that were violated in the interface and to describe the problems identified. The reviewers commented on the use of fonts and colors, medical terminology, organization of information, and more. Future efforts by our research team will incorporate the use of more flexible interface design software to develop user interfaces with a wider range of presentation and interaction methods.

Funding: Department of Anesthesiology, Duke University Medical Center; Department of Industrial Engineering, North Carolina State University

Training of healthcare research personnel is a critical component of quality assurance in clinical trials. Interactivity (such as simulation) is desirable compared to traditional methods of teaching. We studied subjective assessments of confidence for clinical research coordinators following interactive simulation as a supplement to standard training methods. Our initial evaluations revealed that ratings of confidence increased significantly after the simulation exercise compared to pre-exercise ratings. Significant improvements were found along all three dimensions of Bloom’s Taxonomy including affective, psychomotor, and cognitive scales. We suggest that simulation exercises should be considered when training study coordinators for complex clinical research trials.

Funding: Department of Anesthesiology, Duke University Medical Center.

One major limitation in the use of human patient simulators in anesthesiology training and assessment is a lack of objective, validated measures of human performance. Objective measures are necessary if simulators are to be used to evaluate the skills and training of anesthesia providers and teams or to evaluate the impact of new processes or equipment design on overall system performance. There are two main goals of this project. The first goal is to quantitatively compare objective measures of anesthesia provider performance with regard to their sensitivity to both provider experience and simulated anesthesia case difficulty. We will compare previously validated measures of anesthesia provider performance to two objective measures that are fairly novel to the environment of anesthesia care: an objective measure of provider situation awareness and a measure of provider eye scan patterns. The second goal of this project is to qualitatively evaluate the situation awareness and eye tracking data to identify key determinants of expertise in anesthesia providers. These determinants of expertise may then be used to further enhance objective measures of performance as assessment tools and to inform training of anesthesia providers. This project is funded by the Anesthesia Patient Safety Foundation and is the recipient of the Ellison C. Pierce, Jr. Education Research Award. https://www.apsf.org/grants-and-awards/grant-recipients/

This project, funded by the Anesthesia Patient Safety Foundation (APSF), evaluates perioperative data for effects of time of day and surgery duration on the incidence of anesthetic adverse events (AEs). While the effects of fatigue on clinical performance are measurable, these decrements in performance have not been clearly linked to adverse clinical outcomes for patients. Potential risk factors for anesthetic mishaps that may be associated with fatigue include the time of day that surgery takes place and the duration of surgery. Data from a perioperative “Quality Improvement” (QI) database used by the Duke University Medical Center, containing details from over 86,000 surgical procedures, are being coded and analyzed for time of day and surgery duration effects. The project involves review of the QI event labels and associated free text by anesthesiologists to categorize QI documentation into specific types of AEs. Additional risk factors for AEs, such as patient characteristics and surgical complexity, will be included in the analysis as covariates.
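One simple first look at a time-of-day effect is the unadjusted AE incidence per block of surgery start hours. The sketch below assumes each procedure has been reduced to a start hour and a yes/no AE flag; that layout and the function `ae_incidence_by_start_hour` are illustrative assumptions, not the project's coding scheme, and the covariate adjustment described above is deliberately left out.

```python
def ae_incidence_by_start_hour(procedures, bin_hours=6):
    """Return {bin_start_hour: fraction of procedures with an adverse event}.

    procedures: iterable of (start_hour, had_ae) pairs, where start_hour is an
    integer from 0 to 23 and had_ae is a bool. Both fields are hypothetical.
    """
    counts = {}
    for start_hour, had_ae in procedures:
        bin_start = (start_hour // bin_hours) * bin_hours
        n, k = counts.get(bin_start, (0, 0))
        counts[bin_start] = (n + 1, k + int(had_ae))
    return {b: k / n for b, (n, k) in sorted(counts.items())}


# Made-up example data, not values from the QI database.
procedures = [
    (7, False), (8, False), (9, True), (10, False),  # daytime starts
    (19, True), (20, False),                         # evening starts
]
incidence = ae_incidence_by_start_hour(procedures)
print(incidence)
```

Raw incidence like this cannot separate fatigue from case mix, which is why patient characteristics and surgical complexity would enter a fuller model as covariates.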

This project, funded by the National Board of Medical Examiners (NBME), seeks to evaluate assessment tools used in other team performance contexts for the measurement of medical student teamwork skills within a small group cooperative learning environment and in a simulated patient care environment. Researchers in the health care industry are increasingly aware of the importance of teamwork skills and advocate a wide variety of training programs related to team coordination. However, these programs tend to be focused toward specialty and continuing education. While the assessment of medical students has covered areas such as interpersonal and communication skills, these assessments generally focus on the student’s interaction with the patient and do not assess team skills in relation to working with other health care providers.

In this research, we hope to answer the following questions:

- Will assessment tools used in other team performance contexts adequately assess individual medical student team skills?

- Can these skills be assessed in naturally occurring team learning environments?

- Do the results of the teamwork skills assessments reflect actual team performance or outcome in scenarios using a human patient simulator?

Assessment measures to be evaluated include self rating of team skills, peer rating of team skills, observer ratings of team skills, and analysis of communication content. We will compare results of measures in the small group environment with measures in a simulated team patient care exercise. We will also investigate the relationship between individual team skill assessment measures and objective measures of team performance in the simulated scenario to determine whether the skill assessment measures are predictive of actual care performance.

The Duke Human Simulation and Patient Safety Center has been involved in usability testing of medical equipment devices such as infusion pumps. Ongoing efforts seek to assess the usability of medical devices under stressful situations (such as high time pressure) that may be simulated through the use of a human patient simulator.

ILE@D is a three-dimensional, collaborative world accessible from any Internet-connected computer that provides an innovative, interactive “front-end” to distance education in the health care professions. ILE@D maximizes face-to-face interactions between teachers and students through self-directed, team-based, and facilitator-led preparatory activities in the virtual environment. While ILE@D is in the beginning phases of development, we have a grand vision for building a generic education framework which can support a variety of inter-professional healthcare training scenarios. The Duke Endowment is providing $2.8M in funding over the next 5 years to initiate this effort with the goal of making the platform broadly available to the healthcare community.

We are designing a flexible architecture to allow creation of a library of locales, tools, medicines, procedures, patient profiles, events, and avatar responses. Once these libraries are created, they can be shared across a range of scenarios. The ILE@D framework, which supports open standards and a plug-in architecture, will become the hub of virtual health care education. Duke sees this as a key opportunity to change the game by allowing students to gain knowledge in the ways that best suit them (individual, online team, and in-person team-based learning environments). ILE@D is seen as a key component of a larger Team Based Learning curriculum which is shared between the Duke University School of Medicine and Duke-National University of Singapore Graduate Medical School. This tool allows students to learn procedures and technologies offline so that class time is devoted to collaborative exploration rather than static lectures.

- Anywhere, anytime access to virtual, interactive health care education that encourages collaboration and communication regardless of location

- Engaging 3D virtual world that immerses the student in realistic health care scenarios, allowing them to practice their skills and reasoning individually or in groups

- Adheres to existing standards for virtual worlds and Learning Management Systems

- Leverages existing educational resources and investments (e.g. BlueDocs)

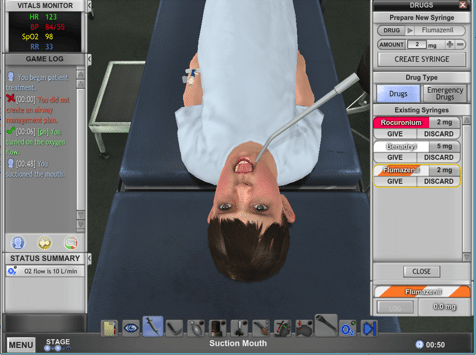

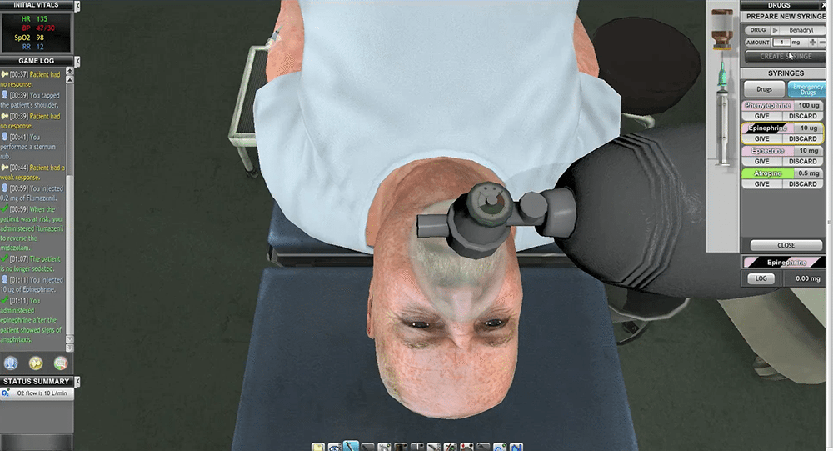

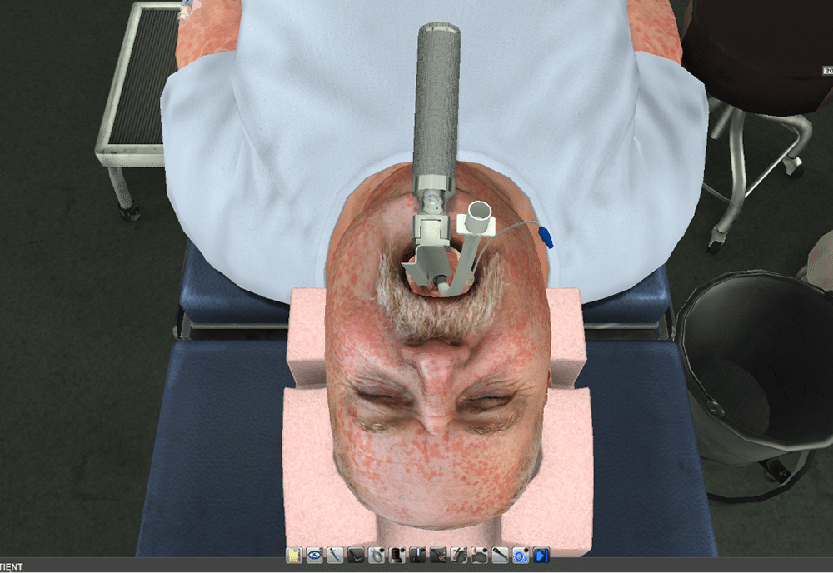

The Pre-Deployment Anesthesia and Anaphylaxis Training System (PDAATS) is a serious game designed to teach and refresh the cognitive skills of rapid sequence intubation and moderate / deep sedation to non-anesthesia providers.

This single-player, first-person simulation enables clinicians to evaluate, sedate, and medically manage ten different patients each with a unique set of medical and contextual challenges. In each scenario, learners must identify and interpret relevant findings in the patient’s background and physical exam to determine primary and secondary sedation plans.

Each patient avatar is innervated by the HumanSim physiology engine which accurately and dynamically generates heart rate, respiratory rate, blood pressure, and blood oxygen saturation depending on the learner’s actions. Cognitive fidelity is further enhanced through an innovative interface that allows learners to select medications, medication dosages, and delivery.

Following the simulation, a robust after action review (AAR) allows players to learn reflectively from their performance.

PDAATS was produced with Applied Research Associates (ARA) with funding from the Army’s Telemedicine and Advanced Technology Research Center (TATRC).

In collaboration with Applied Research Associates (ARA).

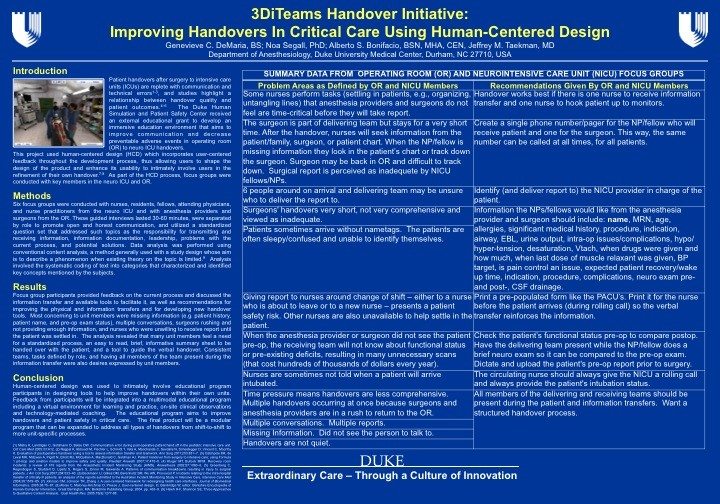

Patient transfers (handovers) from surgery to intensive care units (ICUs) are known to be fraught with communication and technical errors, and multiple studies highlight a relationship between the quality of handovers and patient outcomes.

The Human Centered Design of Handovers/Virtual Handovers project aims to improve communication and decrease preventable adverse events related to OR to Neuro-ICU handovers. The project will achieve this through the development and use of a web-based, modular, multi-modal education platform which will include instructional videos and an interactive, immersive “virtual handover game.” As a modular platform, material can be added to address all types of handovers from shift-to-shift to more unit-specific processes.

The virtual handover game was developed using human centered design (HCD), a methodology that incorporates feedback from users throughout the development process. This process allows users to shape the design product and enhance its usability, as well as allowing them to be closely involved in the refinement of their own handover process. As part of the HCD process, focus groups were conducted with key members in the neuro ICU and OR. Feedback from the focus groups was then analyzed via conventional content analysis and integrated into the educational program.

Feedback collected through the focus groups and analyzed via conventional content analysis will be integrated into a multimodal educational program including 3DiTeams (a virtual environment for learning and practice), on-site clinical observations and technology-mediated coaching. This educational program aims to improve handover quality and patient safety in critical care. The final product will be a modular program that can be expanded to address all types of handovers from shift-to-shift to more unit-specific processes (in this case OR to Neuro ICU).

After the launch of the education program, an educational research study will be conducted to evaluate the role of mentorship in the retention of teamwork behaviors.

The study aims to provide pilot data for the research hypothesis that mentoring will slow the decay of learned teamwork and communication behaviors to a greater extent than self-study alone or no intervention.

Project Team:

- Dr. Jeffrey Taekman – Principal Investigator

The HSPSC Team and Executive Committee:

- Dr. Kathryn Andolsek

- Dr. Atilio Barbeito

- Al Bonifacio

- Mr. Bob Blessing

- Dr. Chris DeRienzo

- Mrs. Mary Holtschneider

- Dr. Jonathan Mark

- Ms. Marie McCulloh

- Dr. David McDonagh

- Ms. Judy Milne

- Dr. Chitra Pathiavadi

- Dr. Christy Pirone

- Dr. Noa Segall

- Dr. David Turner

- Dr. Melanie Wright

Special thanks to the following for their support and contributions to this project:

- Duke University Hospital

- Australian Commission on Safety and Quality in Health Care (2010). The OSSIE Guide to Clinical Handover Improvement. Sydney, ACSQHC.

- CS&E Handoff Team, UT Southwestern Medical Center

- Durham Patient Safety Center of Inquiry and VA National Center for Patient Safety

- Charles George VA Medical Center, Asheville, NC

- Turnip Video

- BreakAway Games

- All of our partners who donated their time, effort, and talent to making this project possible

Funding provided by Pfizer and Abbott.

In collaboration with BreakAway, Ltd.

Handover Types

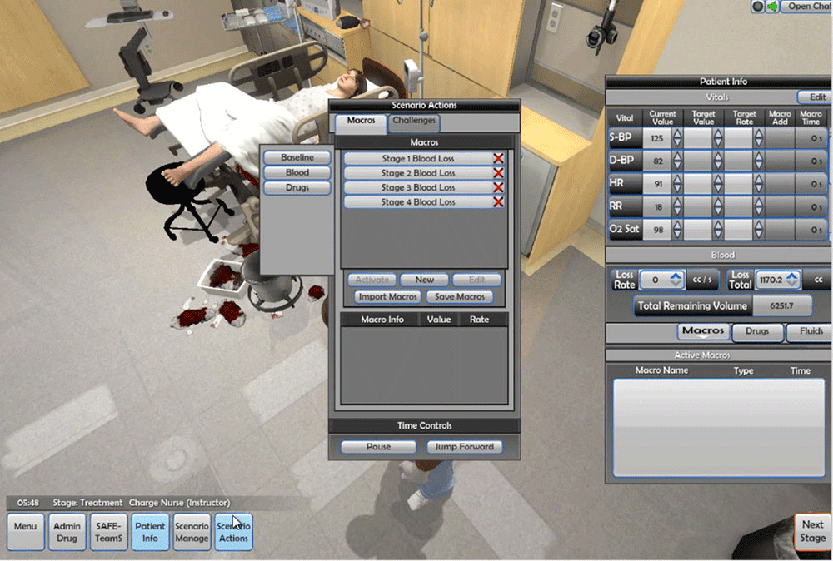

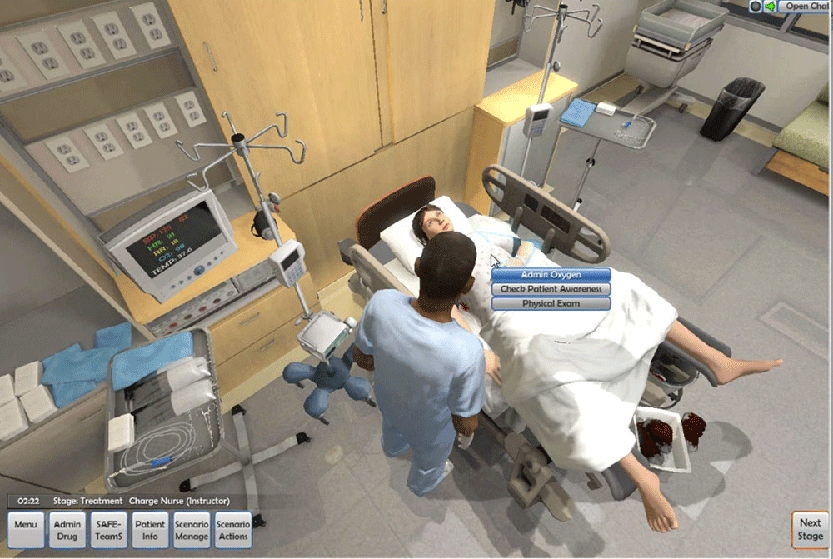

Post Partum Hemorrhage (PPH) is a 3D, multi-player, instructor-facilitated virtual simulation platform designed to train teams of clinicians in the medical management of post-partum hemorrhage as well as effective teamwork and communication behaviors.

Users may choose to participate in the exercise as physicians or nurses, the facilitator, or choose to be invisible as an observer. A user may even elect to be the voice of the patient.

PPH is set in a realistic virtual birthing suite where learners can freely move about and interact with the patient, equipment, supplies, and other items in the environment through an intuitive interface of on-screen buttons or item highlights. The game allows learners to perform medical procedures such as fundal massage as well as deliver routine and emergency medications and fluids.

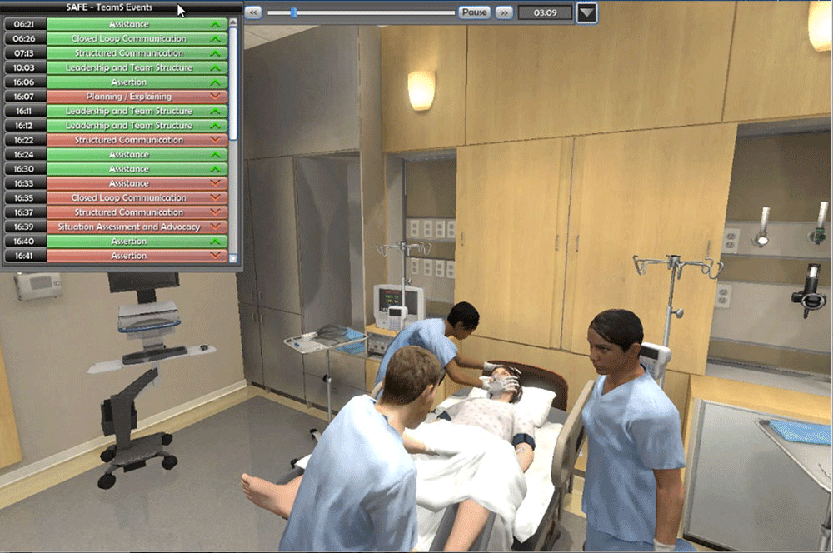

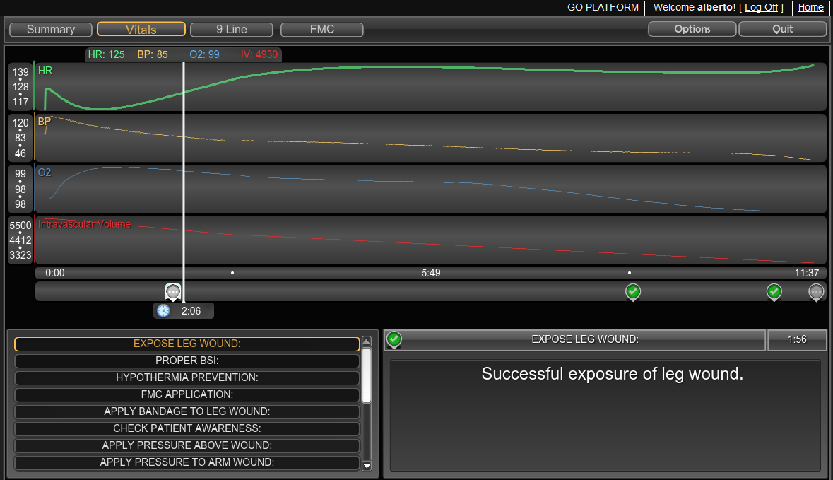

The game’s facilitated model provides the learning flexibility of traditional high-fidelity manikin simulation. Dashboards in the game allow facilitators to control every aspect of the patient’s physiology as individual parameters (figure 2) or through the use of macro buttons to quickly move from one patient state to another. Facilitators also have the ability to control the patient’s vital signs, lab results, and rate of hemorrhage as well as the environment to include the delivery of blood products.

The SAFE-TeamS / TeamSTEPPS feature allows observers and other participants to tag game actions as ‘good’ or ‘bad’; the tagged actions can then be reviewed during the game’s robust Recap stage. In the Recap stage, an interactive replay of the entire scenario, the instructor leads a discussion about game actions and compares gameplay to the specified protocol.

PPH uses the Unreal Engine and was built on the ILE@D Lite platform.

In collaboration with Applied Research Associates (ARA).

Prior to the finalization of a complex protocol and/or during the start-up of a study that has unique training needs, we will institute the use of the Duke Human Simulation and Patient Safety Center (HSPSC) for two distinct purposes: protocol “usability”/design and immersive training of research personnel.

Researchers in the HSPSC have demonstrated phenomena consistent with learning curves in the data record of several clinical trials [1] and have described the benefits of applying simulation techniques in the development and start-up of clinical trials [2,3]. There is emerging evidence that learning curves may negatively influence the outcome of large multicenter clinical trials [4] and the frequency of adverse events [5]. The HSPSC is pioneering the use of human factors methods and simulation to mitigate learning curves in clinical trials. The application of our methods provides long-term benefits to patients, trainees, and sponsors, including protection of the sponsor’s investment in their drug or device, reduction of research cost, and, most importantly, reduction of the potential risks to real patients. Simulation (mannequin-based or actor-based) is typically used in two ways: 1) protocol walk-throughs and 2) research personnel training.

Walkthroughs are used to assess protocol design. Protocol developers observe their study performed by research personnel in a high-fidelity simulated clinical setting prior to subject enrollment. Viewing (and reviewing) walkthroughs of clinical protocols helps uncover issues with design, timing, complexity, and practicality. Potential protocol errors and inefficiencies are highlighted and can be corrected before the protocol is implemented, thereby minimizing subject exposure and the number of protocol amendments—ultimately impacting both safety and cost.

In addition, skill acquisition of the research personnel is a key factor in the safe and effective deployment of a clinical research protocol. Proper performance of a clinical trial depends on complex behaviors. Complex human behaviors demonstrate ‘learning curves’, where performance improves with repetition. As on a sports team, proficiency is gained through practical experience. Interactive training in a simulated environment provides the opportunity for investigators, coordinators, and monitors to ‘practice’ without placing subjects or data at risk. Following simulation training, research personnel are closer to optimal performance at the time subject enrollment begins.

Ultimately, we believe our methods improve the chances of a successful trial (through better design and enhanced research personnel performance) while minimizing cost, improving efficiency, and enhancing safety.

Two examples of protocols using the simulation training are:

- A Phase II multicenter, randomized, double-blind, parallel group, dose-ranging, effect-controlled study to determine the pharmacokinetics and pharmacodynamics of Sodium Nitroprusside in pediatric patients, sponsored by the NIH.

- A Phase III, multicenter, randomized study of the safety and efficacy of Heparinase I versus Protamine in patients undergoing CABG with and without cardiopulmonary bypass, sponsored by an industry pharmaceutical company.

References

1. Taekman JM, Stafford-Smith M, Velazquez EJ, Wright MC, Phillips-Bute BG, Pfeffer MA, et al. Departures from the protocol during conduct of a clinical trial: A pattern from the data record consistent with a learning curve. Qual Saf Health Care 2010, Aug 10.

2. Wright MC, Taekman JM, Barber L, Hobbs G, Newman MF, Stafford-Smith M. The use of high-fidelity human patient simulation as an evaluative tool in the development of clinical research protocols and procedures. Contemp Clin Trials. 2005;26:646-659.

3. Taekman JM, Hobbs G, Barber L, et al. Preliminary Report on the Use of High-Fidelity Simulation in the Training of Study Coordinators Conducting a Clinical Research Protocol. Anesth Analg. 2004;99:521-527.

4. Macias WL, Vallet B, Bernard GR, et al. Sources of variability on the estimate of treatment effect in the PROWESS trial: implications for the design and conduct of future studies in severe sepsis. Crit Care Med. 2004;32:2385-2391.

5. Laterre P-F, Macias WL, Janes J, et al. Influence of enrollment sequence effect on observed outcomes in the ADDRESS and PROWESS studies of drotrecogin alfa (activated) in patients with severe sepsis. Critical care (London, England). 2008;12:R117.

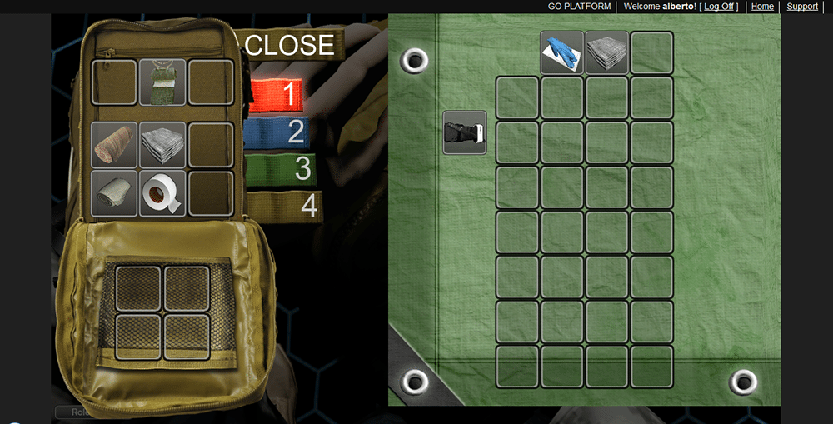

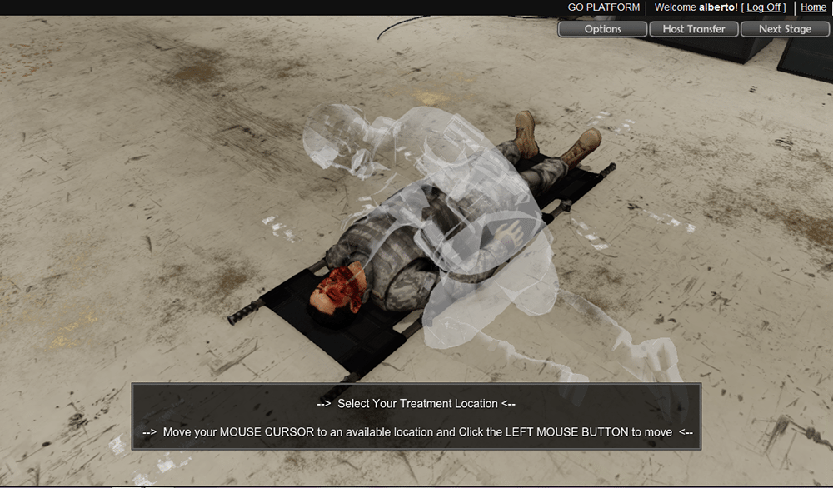

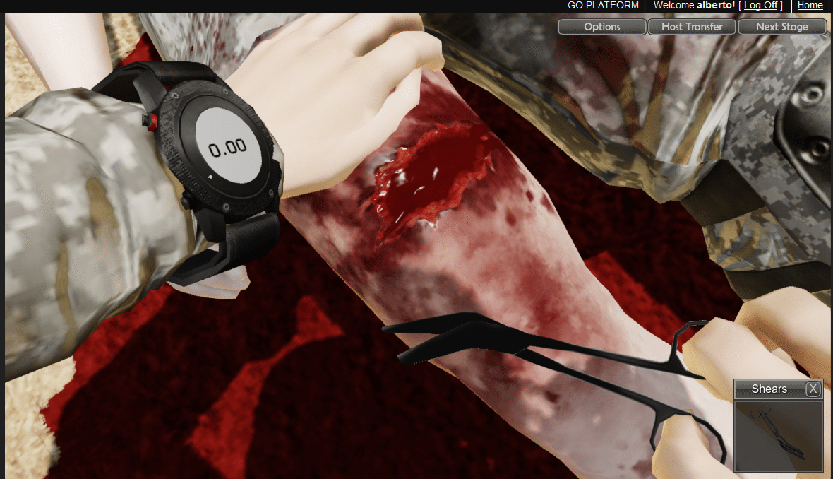

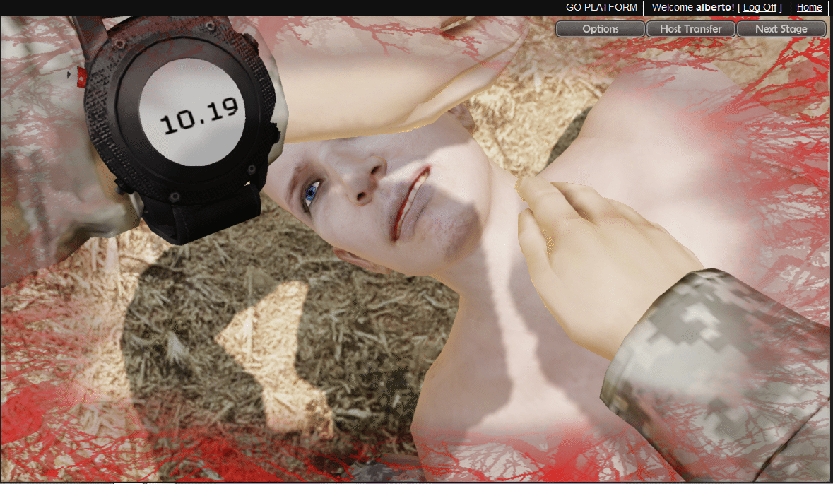

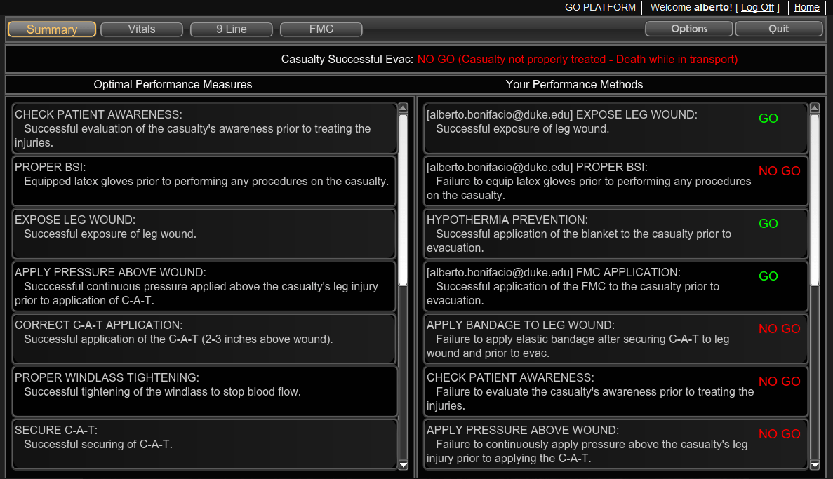

Combat Medic is an exciting new virtual educational program designed to train Army medics and other military medical personnel to manage the top causes of death on the modern battlefield: hemorrhage, airway obstruction, and tension pneumothorax (2014). Designed as a procedural trainer, the game allows medics to cognitively apply, practice, and review Tactical Combat Care, patient management, and documentation in a contextually accurate combat scenario. Combat Medic also allows for the application of other important concepts such as equipment preparation, team organization, and communication through unique and intuitive user interfaces that preserve contextual, cognitive, and task fidelity.

Learners can either practice independently as a single player or use the game’s multi-player function for facilitator-led and team events. Opportunities to learn reflectively are made possible with virtual pre- and post-game meeting spaces and a robust debriefing platform replete with analysis of task performance and video playback. To maximize deployability and access, the game works from browser plug-ins that are compatible with any recently produced computer.

Combat Medic can benefit medical training and sustainment in a number of ways:

- Combat Medic can be used as a viable alternative training method for individual and collective tasks – especially for medics and units with limited training time and access to resources.

- The game’s accessibility maximizes unscheduled training time by providing learners the ability to practice complex skills at a time and place of their choosing. This tool can be especially useful for individual task training (crawl phase) as part of a schoolhouse curriculum, cyclical training, or in preparation for field exercises.

- Combat Medic can maximize the mission impact of live or manikin-based events by improving simulation center throughput (increased “first-time goes”); improving pre-event competence allowing for a greater emphasis on collective tasks; and prioritizing access for those who need manikin-based resources the most.

- Combat Medic can be used as a method to assess and validate medical skills. The ability to convene in a shared virtual space from anywhere with internet access can be especially useful for Reservists, National Guardsmen, distance learners, and others who are geographically separated.

- Standardized and tailored task requirements will improve the consistency of training within and between different units.

- Combat Medic’s ability to collect performance data from every user can yield valuable insight into Army-wide medic readiness. By capturing and analyzing these data, commanders and training NCOs will be better able to track medic competency and direct costly training resources to where they can provide the most impact to the mission.

- Leaders can use the game as a virtual sandbox to experiment with, develop, and implement new team processes and often-overlooked tasks such as packing aid bags.

- Combat Medic can also help combat lifesavers, Soldiers in other medical specialties, and all other Soldiers practice buddy aid.

In summary, Combat Medic has the potential to improve individual and command readiness, improve compliance with sustainment requirements, prevent skills decay, improve effectiveness and efficiency of simulation resources, and ultimately save lives.

Project funded by: RDECOM. In collaboration with Applied Research Associates (ARA).

Human Factors Projects

Prospective memory is the human ability to remember to perform an intended action following some delay. Failures of prospective memory (PM) may be the most common form of human fallibility. They have been found to be a significant source of error in aviation and other work domains, but have received little attention in the anesthesia literature. Yet demanding perioperative work conditions, which often require multitasking and are fraught with interruptions and delays, place a heavy burden on the PM of anesthesia providers. For example, distractions – one source of PM errors – account for 6.5% of critical anesthesia incidents. There is a critical need to examine the effect of PM and its failures on patient safety and the care delivery process in the perioperative environment. The first objective of this project is to systematically quantify PM errors of anesthesia providers. An additional goal is to determine to what extent failures of PM contribute to medical errors in this domain.

We interviewed anesthesia providers in order to understand situations and conditions that are conducive to forgetting to perform clinical tasks. Interview questions also probed task types that were more likely to be deferred or omitted and cueing strategies, i.e., methods for recalling tasks at the right time. Interview participants were 19 nurse anesthetists, residents, fellows, and attending anesthesiologists. They provided varied examples of tasks that were delayed or omitted while preparing for surgery (e.g., bringing backup airway devices, checking a lab value, obtaining drugs from pharmacy, and administering antibiotics), during surgery (e.g., obtaining and checking on lab values, acting on abnormal lab values, labeling, reading, and administering drugs, closing off IV bags, and documenting care), and following surgery (e.g., wasting narcotic medications, signing out postoperative patients, and checking a chest x-ray of a patient with a central line). Interestingly, providers discussed a wide array of PM errors, and only a few were reported by multiple providers. The factors contributing to forgetting (or deferring) tasks were varied, but time pressure was often cited, and many tasks lacked a cue to prompt their execution (e.g., recording urine output). A large majority of the events did not lead to patient harm.

We are currently analyzing interview transcripts to categorize PM failures along several axes, such as cue source (self-initiated or external), causes for task deferrals (disruptions, delays, etc.), and mechanisms for dealing with them. We will use these categories to guide observations and queries of anesthesia providers in the operating room in order to quantify and classify PM failures and distractions that may cause them. We will also analyze databases of anesthesia-related adverse events both retrospectively and prospectively to determine the extent to which PM errors and near misses affect patient safety in the perioperative environment.

PM errors can directly impact patient safety by causing providers to forget to execute tasks in the operating room, or to execute them too late. The proposed work will have significant impact on our understanding of PM as a source of errors in the perioperative environment and will advance our ability to support clinicians’ cognitive work in this complex, busy environment.

Reference

Segall N, Taekman JM, Wright MC. Forgetting to remember: Failures of prospective memory in anesthesia care. International Symposium of Human Factors and Ergonomics in Healthcare. 2014.

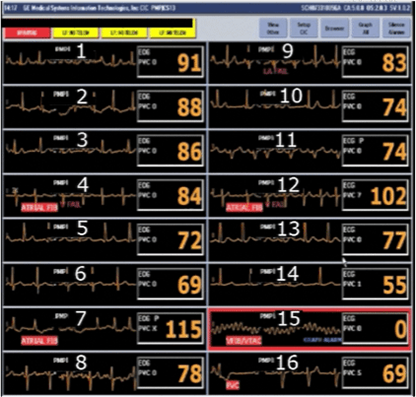

A growing number of hospitalized at-risk patients are being monitored remotely by cardiac telemetry technicians to increase the potential for timely detection of cardiac events. However, decisions regarding the number of patients that a single technician can safely and effectively monitor appear to be primarily based on technological capabilities and not on our understanding of human information processing limitations. Limitations of human visual memory and eye scan rates may place upper limits on the number of patients that a technician can safely monitor. In addition, we know that humans are relatively poor at maintaining attention over long periods of time, and quickly succumb to a vigilance decrement, a reduction in detection performance over time. At Duke University Hospital, we estimate that our technicians see a life-threatening pulseless ventricular tachycardia or ventricular fibrillation (VT/VF) rhythm about once or twice a month. Because these are rare events, they are difficult to study in clinical practice, and it is difficult to assess the effect of different patient loads on performance with respect to prompt response to these life-threatening events. We are completing a grant funded by the Agency for Healthcare Research and Quality, “Workload effects on response time to life-threatening arrhythmias,” in which we used laboratory-based simulation to determine the impact on detection time of increasing the number of patients monitored.

We designed a realistic simulation that replicated the actual tasks performed by remote telemetry technicians at Duke University Hospital. We video- and audio-recorded true patient data with a single simulated patient embedded in the patient set. The technical implementation involved connecting an ECG rhythm simulator into the hospital’s network that transmits physiological signals to remote telemetry monitors. The signal appeared in exactly the same way as it would appear for a real patient. Since multiple patients are monitored simultaneously, the simulated signal appeared on the monitor as one of many signals (Figure 1).

We set up one display with 15 true patients and one simulated patient (Figure 1), another display with 8 patients, and 2 displays with 16 patients each. Combining these displays allowed us to simulate loads of 16, 24, 32, 40, and 48 patients (see Figure 2 for the 48-patient setup). We recorded both audio (alarm) and video data for these screen setups for 4 hours. During the audio and video recording period, we simulated one VF arrhythmia. We timed the event to occur well into the data collection period (more than 3 hours in), to allow participants to become comfortable with the task environment and to possibly experience a vigilance decrement due to a long time on the task, similar to their daily work environment.
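The arithmetic behind the five patient loads can be checked with a short sketch. It assumes, as the text implies but does not state outright, that the display containing the simulated patient is part of every condition; the display labels are hypothetical names used only for illustration.

```python
from itertools import combinations

# Display sizes taken from the description above. The labels are hypothetical.
# "A_sim" is the display with 15 true patients plus the simulated patient.
DISPLAYS = {"A_sim": 16, "B": 8, "C": 16, "D": 16}
REQUIRED = "A_sim"  # assumption: the simulated patient is shown in every condition


def display_sets_for(load):
    """Return every set of displays that includes REQUIRED and totals `load` patients."""
    others = [name for name in DISPLAYS if name != REQUIRED]
    matches = []
    for k in range(len(others) + 1):
        for combo in combinations(others, k):
            total = DISPLAYS[REQUIRED] + sum(DISPLAYS[name] for name in combo)
            if total == load:
                matches.append((REQUIRED,) + combo)
    return matches


if __name__ == "__main__":
    for load in (16, 24, 32, 40, 48):
        print(load, display_sets_for(load))
```

Under these assumptions, each of the five loads is reachable from the four recorded displays, with the 48-patient condition using the three 16-patient displays together.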

For the experiment, study participants monitored patients in a private room (Figure 2). They were randomly assigned to 1 of the 5 patient loads, and completed a 4-hour monitoring session. The study coordinator received and responded to calls made by participants to “the nurse” or “the unit coordinator.” Responses to calls were scripted. Participants were given instructions to call one number for routine calls and a different number for urgent calls (a button press to choose a line). Performance data were recorded manually in real time. During this session, the simulated patient sustained VF, and the time required for the participants to identify this arrhythmia was recorded.
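As an illustration of random assignment to the five patient loads, the sketch below shuffles a near-balanced pool of conditions. The balancing scheme, the seed, and the participant identifiers are all assumptions made for this example; the description above says only that assignment was random.

```python
import random

PATIENT_LOADS = (16, 24, 32, 40, 48)


def assign_loads(participant_ids, seed=0):
    """Randomly assign each participant to one patient load.

    Assumed scheme (not stated in the study description): build a pool in
    which the loads appear as evenly as possible, then shuffle it.
    """
    rng = random.Random(seed)  # fixed seed so the allocation is reproducible
    n = len(participant_ids)
    pool = [PATIENT_LOADS[i % len(PATIENT_LOADS)] for i in range(n)]
    rng.shuffle(pool)
    return dict(zip(participant_ids, pool))


if __name__ == "__main__":
    # Hypothetical identifiers P01..P10, for illustration only.
    ids = [f"P{i:02d}" for i in range(1, 11)]
    for pid, load in assign_loads(ids).items():
        print(pid, load)
```

A balanced pool keeps group sizes within one participant of each other, which matters when the number of participants is not a multiple of five.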

The primary dependent variable is the time elapsed from the point at which the arrhythmia began to the time of the urgent call. The two secondary measures are (1) an overall percent correct score on the required actions defined for real patients, e.g., documentation and phone calls, and (2) an overall score for interpreting the patients’ ECG strips. Forty-two participants – remote telemetry technicians and nurses from cardiac units (e.g., cardiothoracic intensive care units) at Duke University Hospital and 3 surrounding hospitals – completed the study. Data analysis is ongoing.
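The primary measure and the percent correct score described above could be computed along the following lines. This is a minimal sketch: the function names and the example timestamps and counts are invented for illustration and are not study data, and the handling of a participant who never places the urgent call is an assumption.

```python
from datetime import datetime


def detection_latency_seconds(onset, urgent_call):
    """Seconds from arrhythmia onset to the urgent call.

    Returns None when no urgent call was made (an assumed convention).
    """
    if urgent_call is None:
        return None
    delta = (urgent_call - onset).total_seconds()
    if delta < 0:
        raise ValueError("urgent call precedes arrhythmia onset")
    return delta


def percent_correct(correct, required):
    """Percentage of required actions that were performed correctly."""
    if required <= 0:
        raise ValueError("required must be positive")
    return 100.0 * correct / required


if __name__ == "__main__":
    # Invented values, for illustration only.
    onset = datetime(2014, 1, 1, 12, 5, 0)
    call = datetime(2014, 1, 1, 12, 6, 30)
    print(detection_latency_seconds(onset, call))
    print(percent_correct(18, 20))
```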

The knowledge to be gained from our study will inform efforts to study this problem in real-world cardiac telemetry and, ultimately, help to develop evidence-based standards for remote monitoring. The application of such standards is expected to improve survival after in-hospital cardiac arrest.

Reference

Segall N, Hobbs G, Bonifacio AS, Anderson A, Taekman JM, Granger CB, Wright MC. Simulating remote cardiac telemetry monitoring. 14th International Meeting on Simulation in Healthcare. 2014.

Gains in healthcare efficiency, quality, and safety that are expected through the use of electronic health records (EHRs) will never be achieved if providers are unable to quickly search and interpret information critical to patient care decisions. As the use of EHRs expands, there is a need to better understand the use of patient data to support organization and prioritization decisions and the development of intelligent presentation and search tools. The goal of our research, funded by the National Library of Medicine, is to develop principles for the design of EHRs that will better support clinicians in their work through the application of contextual design methods.

We applied a contextual inquiry approach to data collection by recording providers’ information use activities during actual patient care, followed by retrospective verbal protocol interviews. Critical care providers wore an eye tracker during periods of clinical information use (e.g., rounding, admissions). Later, while viewing the eye tracking video, they were asked to describe what information they were accessing and why, and how they used various information sources. Providers were interviewed about both the situation that was recorded and the translation of specific information use activities to other patients and contexts. As a means of analyzing the interview transcripts, we applied a grounded theory-based content analysis technique. This technique involved open coding of the interview transcriptions in which we grouped similar ideas into themes or categories. Then, through constant comparison, we renamed, reorganized, and redefined emerging themes.

Data were collected from 20 participants – nurses, residents, nurse practitioners, fellows, and attending physicians. Codes that were developed to capture recurring ideas included usability issues, the importance of recent data, trends, legal/financial/regulatory documentation, trust in data, task tracking, and more. The timeframe code, for instance, captured thoughts related to the temporal utility of data, including how long different pieces of data are useful (hours, days, throughout a patient’s stay, etc.), the appropriate frequency of different types of data for establishing trends, and how these information needs may vary for different providers, patients, or contexts. A quote (from a nurse) relevant to this code is “Weights [presented] will be yesterday’s weight, today’s weight, and admission weight which is nice because we trend how fluid overloaded or how dry they are.” Emerging themes that may be useful for generating design principles include meaningful presentation methods (e.g., “big picture” flow sheets, lab trends), sort and search functions, and display customization. The timeframe code, for example, is expected to provide insight into methods for presenting data in a meaningful way and how they may be tailored for different situations.

Human-centered design methods are critical to the evolution of EHRs into tools that truly assist clinicians in making the right care decisions rapidly. We used contextual inquiry, a human-centered design ethnographic research method, to study information seeking and documentation activities in critical care. Currently, we are engaged in visioning interviews (which follow contextual inquiry in a contextual design approach). These interviews serve two purposes: (1) they provide an opportunity for interviewees to comment on our eye tracking interview findings and (2) they provide the opportunity to discuss innovation ideas in the context of information presentation to support critical care. We have conducted visioning interviews with 4 critical care providers and one biomedical informaticist. Coding of these interviews is focused on identifying agreement or dissent with respect to our findings from the eye tracking-based interviews and comparing and contrasting the feedback on innovation ideas across participants.

These methods are expected to improve our understanding of information use in the context of healthcare delivery. The findings from our research are expected to have implications for the future design of EHRs to support faster information access and to ensure that critical information is not missed.

References

Wright MC, Dunbar S, Moretti EW, Schroeder RA, Taekman JM, Segall N. Eye-tracking and retrospective verbal protocol to support information systems design. Proceedings of the International Symposium of Human Factors and Ergonomics in Healthcare. 2013; 2(1):30-37.

Segall N, Dunbar S, Moretti EW, Schroeder RA, Taekman JM, Wright MC. Contextual inquiry to support the design of electronic health records. Anesthesiology. 2013: A3151.